Developer / Designer / Human-Centred Problem Solver

Unveiling the power of digital experience through cutting-edge technology and creativity.

2025

Auditory-Haptic Illusion

A Psychophysical Study Investigating the Auditory-Haptic Illusion

Physiological Experiment

VR Application

| Role | Tech Stack |
|---|---|
| Researcher / VR Developer | Unity / VR / Novint Falcon |

This project explored whether auditory cues could change how stiff a virtual object feels when pressed. The goal wasn’t to simulate stiffness with hardware, but to shift perception through multisensory experience. It challenged a core UX question: can we design for sensation without physical fidelity?

Context & Challenge

While haptic realism in VR often depends on expensive actuators, I was curious whether softness or hardness could be 'felt' differently using only sound. The challenge was to create a controlled experiment that stripped away variables and tested a simple idea: do brief, well-timed sound bursts alter our sense of material resistance?

Experiment

- I designed a VR pressing task in Unity for the HTC Vive, with a Novint Falcon providing consistent force feedback across all trials. Participants pressed two virtual objects and compared their stiffness. One object triggered discrete auditory grains during the press; the other was silent. Across 490 randomized trials per participant, I varied both grain count and physical stiffness. I also collected post-task reflections on participants' sensory strategies: did they rely on touch, sound, or visual cues?

- Participants compared object pairs in a two-alternative forced-choice (2AFC) design. Half judged which object felt stiffer; the other half judged which felt softer. Post-task interviews revealed that most participants instinctively relied on haptic input. Some noticed the sound but rarely associated it with a difference in material, despite its precise timing and structure.
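The randomized trial structure described above can be sketched roughly as follows. This is a minimal illustration, not the study's actual code: the function `build_trials`, the field names, and the specific levels (7 grain counts × 7 stiffness levels × 10 repeats, chosen only because they multiply to 490) are all assumptions.

```python
import itertools
import random

def build_trials(grain_counts, stiffness_levels, repeats, seed=0):
    """Return a shuffled list of 2AFC trials.

    Each trial pairs a silent object with an object that plays `grains`
    sound grains and has the given stiffness level.
    """
    rng = random.Random(seed)
    trials = []
    for grains, stiffness in itertools.product(grain_counts, stiffness_levels):
        for _ in range(repeats):
            trials.append({
                "grains": grains,
                "stiffness": stiffness,
                # Randomize which side holds the object with sound.
                "sound_side": rng.choice(["left", "right"]),
            })
    rng.shuffle(trials)
    return trials

# Hypothetical levels: 7 grain counts x 7 stiffness levels x 10 repeats = 490.
trials = build_trials(range(1, 8), range(1, 8), repeats=10)
print(len(trials))  # 490
```

Seeding the generator per participant keeps each trial order reproducible while still differing between participants.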

Key Findings

- Statistical analysis found no significant sound-induced shift in perceived stiffness across participants. In a few isolated cases, a higher grain count seemed to increase perceived stiffness, contradicting the original hypothesis. The illusion's failure highlighted the strength of modality dominance in perception, and the need for narrative coherence in cross-modal design.

Reflection

- While the result didn’t confirm the hypothesis, the project offered critical insights into how people make sense of multisensory feedback—and when they don’t. It reinforced that designing for perception means engaging not just with signal fidelity, but with meaning-making and user expectation.

2024

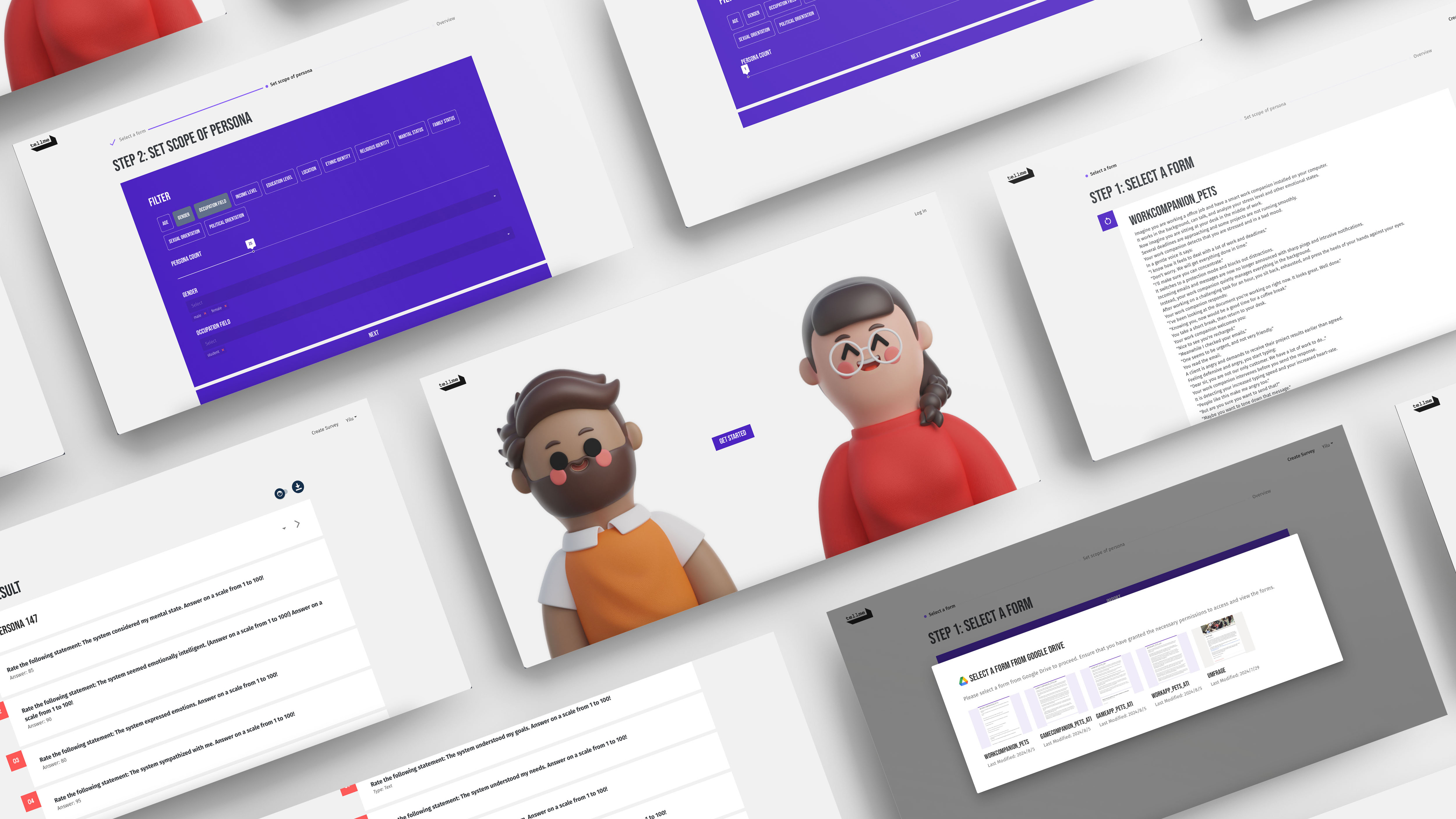

Tellme

Simulating Survey Data with LLM-Based Personas

UX Research

Product Design

Human-AI Interaction

| Role | Tech Stack |
|---|---|
| Co-Founder / UX Researcher / Frontend Engineer | React / Node.js / Django / Docker / LLM |

Tellme began with a UX research question: how might we help researchers test and iterate surveys more efficiently, especially when they can't access target users? Inspired by the growing capabilities of LLMs, we hypothesized that we could simulate personas to generate realistic, structured responses to real-world surveys.

Identifying the Problem

Through interviews with researchers, we surfaced key pain points: pre-testing surveys is slow, unrepresentative, and often biased. Users lacked tools to preview how different populations might interpret questions. Our insight was that what researchers needed wasn’t just better survey tools—but a way to simulate diverse user mindsets without conducting full deployments.

Design Strategy

- We focused on designing a persona system that could be both expressive and statistically grounded. Personas were defined through demographic and psychological attributes including Big Five traits mapped to real-world distributions.

- Prompt construction for the LLMs was treated as part of the UX layer: we embedded readable trait definitions, minimized hallucinations, and provided transparency into how responses were generated and validated.
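As a rough sketch of the persona and prompt ideas above, the snippet below samples Big Five scores and embeds them as readable lines in a prompt. Everything here is assumed for illustration: the 1 to 5 scale, the placeholder mean and standard deviation (not real population statistics), the age range, and the names `sample_persona` and `build_prompt`. It does not call any LLM.

```python
import random

BIG_FIVE = ["openness", "conscientiousness", "extraversion",
            "agreeableness", "neuroticism"]

def sample_persona(rng, trait_mean=3.0, trait_sd=0.7):
    """Draw one persona; trait scores are clipped to a 1-5 scale.

    trait_mean and trait_sd are placeholders, not real-world distributions.
    """
    traits = {
        name: round(min(5.0, max(1.0, rng.gauss(trait_mean, trait_sd))), 1)
        for name in BIG_FIVE
    }
    return {"age": rng.randint(18, 80), "traits": traits}

def build_prompt(persona, question):
    """Embed the persona's traits as readable lines in the prompt text."""
    trait_lines = "\n".join(
        f"- {name}: {score} out of 5"
        for name, score in persona["traits"].items()
    )
    return (
        f"You are a {persona['age']}-year-old survey respondent.\n"
        f"Your Big Five trait scores:\n{trait_lines}\n"
        "Answer the question with a single number from 1 to 5.\n"
        f"Question: {question}"
    )

rng = random.Random(42)
persona = sample_persona(rng)
prompt = build_prompt(persona, "I enjoy trying new products.")
print(prompt)
```

Keeping the trait definitions in plain text makes the prompt inspectable, which is one way to support the transparency goal described above.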

Validation

To evaluate the system’s usefulness and credibility, we replicated published survey studies using LLM-generated data. In multiple trials, synthetic responses produced similar distribution trends and latent structures to human data—giving us confidence in the platform’s viability for exploratory and prototyping use cases.
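One simple way to compare a synthetic response distribution with a human one is the total variation distance between the two. The source does not say which metric was used, so this is only one possible check, and the response lists below are toy numbers invented for the example, not project data.

```python
from collections import Counter

def response_distribution(responses, scale=(1, 2, 3, 4, 5)):
    """Share of responses falling on each point of a Likert scale."""
    counts = Counter(responses)
    total = len(responses)
    return {option: counts.get(option, 0) / total for option in scale}

def total_variation_distance(p, q):
    """0 means identical distributions; 1 means no overlap at all."""
    return 0.5 * sum(abs(p[k] - q[k]) for k in p)

# Toy data for illustration only.
human = [1, 2, 2, 3, 3, 3, 4, 4, 5, 5]
synthetic = [1, 2, 3, 3, 3, 3, 4, 4, 4, 5]

tvd = total_variation_distance(
    response_distribution(human),
    response_distribution(synthetic),
)
print(round(tvd, 3))  # 0.2
```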

Key Insights & Reflection

- Users were less interested in pure realism, and more concerned with control, transparency, and traceability—they needed to understand where the data came from and why a persona answered the way it did.

- Designing for trust in AI-driven systems required clear affordances, consistent behavior, and thoughtful error messaging when outputs failed expectations.

- Tellme pushed me to think about UX as a way of shaping how people work with uncertainty, abstraction, and emerging AI behaviors.

2023

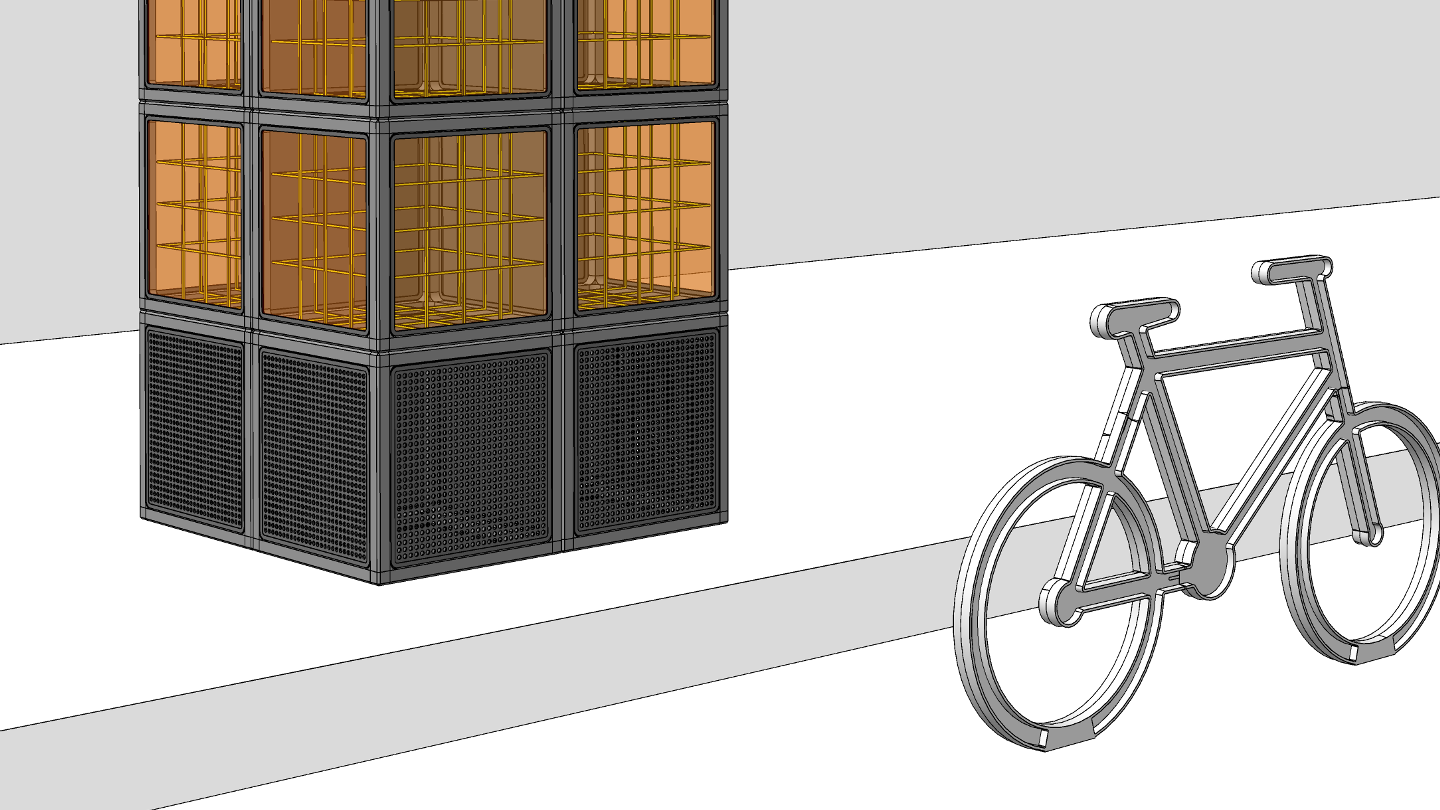

Might Tower

A Speculative Safety System for Cyclists in Urban Futures

Speculative Design

| Role | Tech Stack |
|---|---|
| Service Designer | Physical Prototyping / 3D Printing / Scenario Design / User Interviews |

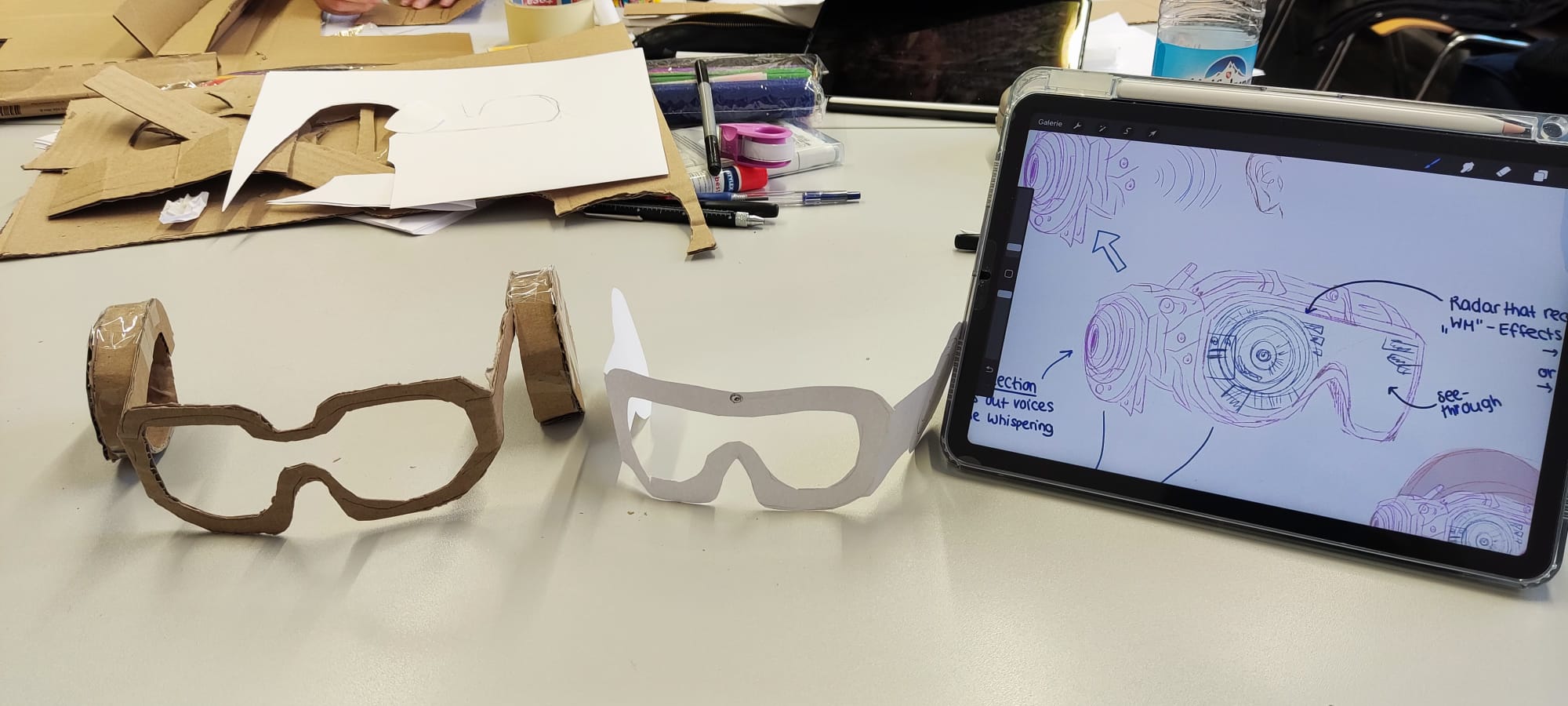

Set in a speculative 2035 Munich where a surreal 'Whispering Mist' distorts perception, Might Tower was conceived as a networked infrastructure system that helps cyclists navigate through reduced visibility and psychological disorientation.

Problem Framing

The Whispering Mist affects visibility, cognition, and perception. Cyclists are especially vulnerable, struggling to stay visible to other vehicles and maintain spatial orientation. Interviews with both frequent and occasional cyclists uncovered fear and anxiety while navigating mist-dense areas. Traditional gestures and communication break down, and cyclists often push their bikes instead of riding.

Research & Ideation

- We started with a participatory approach—gathering open-ended feedback on potential ideas and mapping emotional reactions to the mist. Initial ideas included smart helmets, wearable filters, and AR glasses. But deeper reflection pointed toward urban-scale infrastructure as the most effective and inclusive solution—serving not just individuals, but entire pathways.

Design Solution

- Might Towers are placed every 10 meters along bike lanes and activate via motion sensing. When a cyclist approaches, the tower emits a focused red light beam, ensuring the cyclist remains visible even in thick mist.

- Each tower also contains an air filtration module inspired by real-world urban filters. It creates a 5-meter radius of purified space—offering momentary relief from the visual and cognitive noise of the mist.

Prototyping & Testing

- We developed a physical prototype embedded in a model streetscape to simulate interaction with a full Might Tower network.

- To simulate the Whispering Mist, we used layered mesh sheets, light diffusion techniques, and strategic sightline blocking to emulate real visual limitations.

- Through iterative testing, we found that red light maintained visibility at longer distances than other hues, confirming it as the core visual signal. The setup also helped us validate the tower placement for adequate reaction time and minimal intrusion.

System Design & Scalability

- Towers are energy-autonomous, powered by kinetic energy captured from cyclists' wheels and activated only when needed to conserve resources.

- We proposed a service-based deployment model: government partners fund installation, and maintenance is handled by modular unit swaps and a proactive reporting app that encourages user engagement. Inspired by IKEA’s emotional design and consumer participation, we envisioned users reporting malfunctioning units, reinforcing community ownership.

Key Insights

- User safety is both physical and psychological. The ability to feel seen and grounded in surreal or ambiguous environments can be just as important as reducing accident rates. Prototyping at the system level—rather than focusing solely on product fidelity—enabled us to test the choreography between people, place, and infrastructure.

- This project honed my ability to frame speculative futures into user needs and to turn surreal challenges into researchable, designable problems. Ultimately, it taught me how to build not just prototypes—but possibilities.

2023

Cycles

A Slow Technology Interface That Reveals Time

Shape-Changing Interface

Product Design

| Role | Tech Stack |
|---|---|
| Interaction Designer / Hardware Prototyper | Arduino / 3D Printing |

Cycles is not a utility—it’s a temporal companion. Designed as a physical interface for slow living, it challenges our obsession with precision and speed by revealing time through imperceptible change. Instead of displaying numbers or blinking reminders, it changes shape so slowly that you never see it move—but always find it different when you return.

Problem Framing

Modern life floods us with visual urgency: countdowns, alerts, blinking LEDs. But real time doesn’t behave like that—it flows silently, without notice. We asked: what if a device didn’t try to demand your attention, but instead existed quietly alongside you? Could its movement mirror the invisibility of time itself?

Design Exploration

- We interviewed individuals with fast-paced routines and found a common craving for ambient presence—interfaces that live with you, rather than speak at you. We explored metaphors of natural time: tides, erosion, the shifting of light. These processes don’t ask for attention—but are deeply felt when noticed. Prototypes were shaped by these insights. We deliberately slowed the expansion mechanism to near-invisibility, rejecting real-time responsiveness in favor of ambient subtlety.

Physical Interface & Technical Foundation

- At the core of Cycles is a Hoberman Sphere, mechanically expanded and contracted using an Arduino-controlled motor. The motion is so gradual that it is nearly imperceptible in real time, but clearly visible over hours.

- A crumpled Japanese paper lampshade covers the sphere, giving the surface a textured softness that diffuses light and hides mechanical complexity. The crumpling also ensures that each unit has a slightly different organic feel.

- LEDs within the structure provide ambient illumination. Though static in brightness, their position shifts subtly as the structure expands—adding a second layer of change to be noticed only over time.

Interaction & Perception

Cycles isn’t meant to be interacted with actively. In fact, we designed it so that you don’t notice it changing while you’re looking at it. But when you come back—from a break, a meeting, a walk—you realize something is different. That’s the experience of time. The lamp becomes a quiet signal that life is moving forward, even if we’re not counting it.

Challenges & Design Decisions

- Designing for imperceptibility was paradoxical. Our goal wasn’t to be seen, but to be noticed in hindsight. We had to find a motor speed and interval that felt just beyond perception—tested through repeated time-lapse comparisons.

- Material choice was also crucial. Early options like crochet and origami failed to support soft, stable movement. Crumpled washi paper hit the balance: translucent, flexible, and symbolically aligned with the beauty of imperfection.
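The motor speed and interval mentioned above come down to simple arithmetic once a cycle length is chosen. The sketch below shows only that calculation, in Python rather than Arduino code, and assumes a motor driven in discrete steps. The 6-hour cycle and 2,000 steps are hypothetical values, not the project's settings.

```python
def step_interval_seconds(cycle_hours, steps_per_cycle):
    """Seconds to wait between motor steps so that one full
    expand/contract cycle takes `cycle_hours`."""
    if cycle_hours <= 0 or steps_per_cycle <= 0:
        raise ValueError("cycle_hours and steps_per_cycle must be positive")
    return cycle_hours * 3600 / steps_per_cycle

# Hypothetical values: a 6-hour cycle spread over 2,000 motor steps.
interval = step_interval_seconds(6, 2000)
print(interval)  # 10.8
```

Spreading the motion over more, smaller steps shortens the wait between them but makes each individual movement harder to notice.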

Key Learnings

- Stillness can be a function. When we remove urgency and interaction, we open space for reflection, presence, and ambient awareness. Subtlety is powerful. Interfaces that whisper instead of shout can cultivate deeper emotional connection over time. Design doesn’t always need to provide feedback—it can instead become a mirror of the world around it, gently shifting without explanation.

Reflection

- Cycles helped me rethink how we design for time—not to measure or optimize it, but to feel it. The lamp doesn’t count seconds. It simply becomes different. I learned to design for invisibility—to create meaning not through function or input, but through the slow accumulation of change. This project stays with me as a reminder that UX design isn’t always about doing. Sometimes it’s about being—and being just slightly different the next time someone notices.

2022

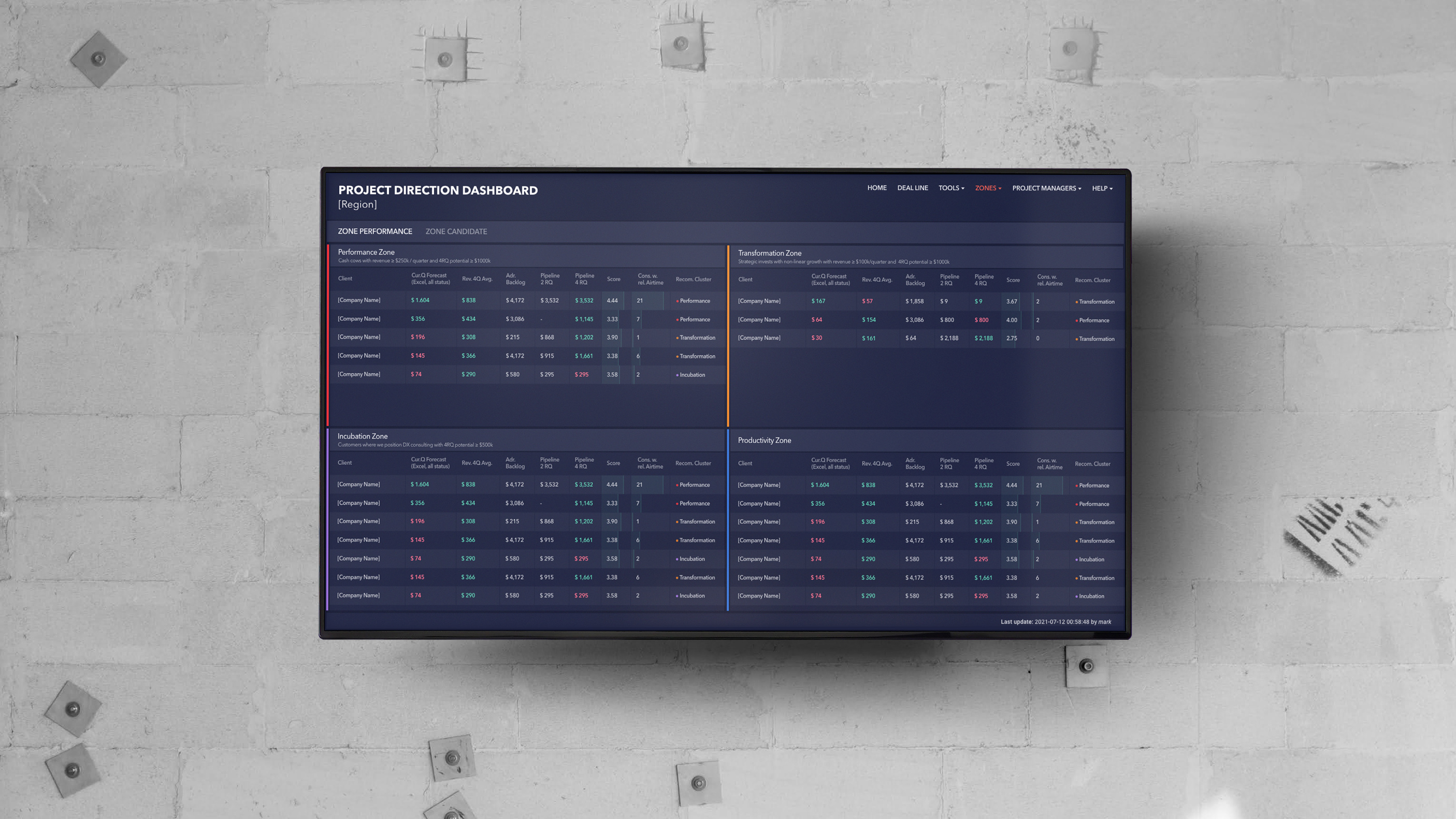

ACS360

Redesigning a Consulting Performance Dashboard

System Redesign

Web Application

| Role | Tech Stack |
|---|---|
| UX/UI Designer / Frontend Engineer | React / Node.js / D3.js / Docker |

ACS360 was originally developed to help consulting teams visualize project health, forecast workload capacity, and make allocation decisions. However, the system had become outdated—slow to load, difficult to navigate, and hard to trust. I joined the project to lead the front-end redesign and improve how information was structured, visualized, and interpreted.

Identifying the Problem

We began with contextual inquiries and stakeholder interviews. Consultants reported that they were relying on external spreadsheets because ACS360’s dashboard was too slow and cryptic to use in time-sensitive situations. They struggled to interpret raw tabular data, lacked comparative views over time, and found it difficult to identify which projects were at risk. Our problem became clear: the system had data—but it wasn’t providing insight.

UX Research & Redesign Strategy

- Through affinity mapping and persona-building, we distilled the needs of two key user groups: team leads who wanted quick performance snapshots, and project managers who needed deeper insights into trends over time.

- We conducted card sorting exercises to re-group data hierarchies and reorganize the dashboard into modules based on workflow logic: overview → risk drill-down → capacity planning.

- We introduced progressive data reveal and micro-interactions to reduce cognitive overload. Users could collapse modules and selectively explore project-level analytics only when needed.

Implementation & Visual Design

- We adopted a dark UI theme to support high visual density and reduce eye strain. Key metrics—like forecast accuracy and deviation from plan—were visualized through color-coded charts and trendlines powered by D3.js.

- We replaced monolithic data loading with lazy fetching, cutting load time by over 60% in high-volume dashboards.

- React’s component-based architecture allowed us to modularize complex views into reusable, maintainable units. Each tile now had a purpose: to surface actionable signals, not just raw values.
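The lazy-fetching idea can be illustrated in a few lines. The real front end was built in React; the sketch below uses Python only to show the pattern, and the class `LazyDashboard`, its method names, and the module names are hypothetical.

```python
class LazyDashboard:
    """Loads a module's data only the first time that module is opened."""

    def __init__(self, loaders):
        self._loaders = loaders  # module name -> zero-argument loader function
        self._cache = {}
        self.fetch_count = 0

    def open_module(self, name):
        # Fetch on first access, then serve from the cache.
        if name not in self._cache:
            self._cache[name] = self._loaders[name]()
            self.fetch_count += 1
        return self._cache[name]

dashboard = LazyDashboard({
    "overview": lambda: {"projects": 12},
    "risk": lambda: {"at_risk": 3},
})
dashboard.open_module("overview")
dashboard.open_module("overview")  # served from cache, no second fetch
print(dashboard.fetch_count)  # 1
```

Nothing is loaded for the "risk" module until a user actually opens it, which is the difference from loading every module up front.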

Key Takeaways & Reflection

- Performance is a UX issue. Long load times discouraged trust and usage, even when the system was technically functional.

- People think in workflows, not in datasets. Restructuring the dashboard to follow decision-making sequences made the tool feel intuitive again.

- Interactive visualizations encouraged exploration. Once users could hover, filter, and zoom into risk trends, they began using the system to anticipate problems rather than just react to them.

- ACS360 showed me that UX design is about trust—not just usability. Users needed to believe the system would support their judgment, not overwhelm or mislead them.

- Ultimately, we created a dashboard that didn’t just look better—but actually helped people make better decisions faster.

2022

Overwatch

Context-Aware Time Tracking Across Environments

Human Factors Engineering

Interaction Design

Ubiquitous Computing

| Role | Tech Stack |
|---|---|
| UX/UI Designer / Concept Designer | Figma |

Overview

Overwatch reimagines time tracking as a system that spans physical and digital space. Instead of forcing users to recall and re-enter activities, it captures context through a network of soft interactions: calendar sync, room sensing, and brief, intentional UI input. It’s a minimal interface layered onto an ambient system—designed to fit into how people already move through work.

Problem Framing

Many knowledge workers—especially those balancing multiple projects, clients, or teams—struggle with fragmented time tracking tools. Retrospective reporting is cognitively demanding, and duplicative processes across internal systems and external formats lead to frustration. We asked: what if time tracking wasn’t a task, but an ecosystem of subtle cues that worked together to support memory, not replace it?

Design Goals

- Make time tracking feel ambient, not burdensome—capturing context across devices, spaces, and calendars.

- Ensure interoperability with diverse workflows (corporate portals, spreadsheets, calendars).

- Balance automation with autonomy: users remain in control of what is recorded and submitted.

System Concept & Ubiquitous Touchpoints

- The system integrates with personal calendars to pre-fill scheduled work time and propose intelligent matches with ongoing tasks.

- Smart badges (or mobile device signals) register presence in workspaces associated with specific projects—capturing task context passively as users move through environments.

- For unstructured time, a lightweight mobile interface allows for quick check-ins or task switching with minimal friction.

- Time entries can be reviewed, corrected, and exported to match the structure of whatever system the organization or client uses.

Key Takeaways & Reflection

- Ubiquitous systems thrive when they reduce mental overhead, not just input effort. Overwatch created value by lowering the need to remember, revisit, and reconcile data.

- Good UX lives in the seams—not just screens. This project pushed me to think across APIs, calendars, badges, and submission logic as a single, unified interaction space.

- More than solving time tracking, this project reframed it—less as a burden, and more as an opportunity to support memory, agency, and continuity across modern work.

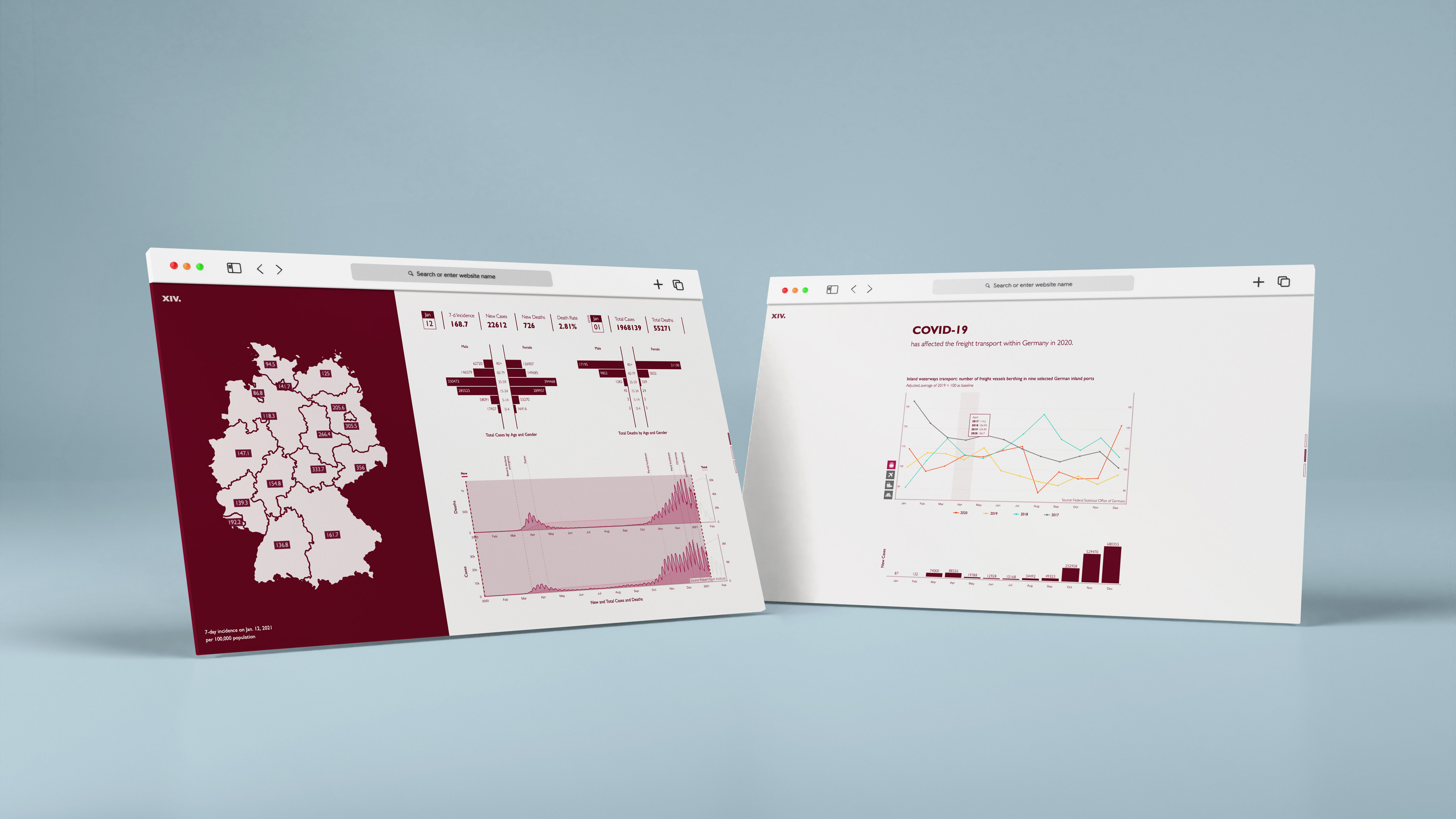

2021

Pandemic Dashboard

Making COVID-19 Data More Human

Data Visualization

Web Application

| Role | Tech Stack |
|---|---|
| UX/UI Designer / Frontend Engineer / Data Analyst | Python / D3.js / HTML / CSS |

| Live Demo |

At the height of the COVID-19 crisis, public dashboards were flooded with numbers—but often failed to tell a meaningful story. We designed the Pandemic Dashboard to transform raw case and death statistics into a humane, interactive experience that helped people not just understand the data, but engage with it emotionally and contextually.

Problem Framing

The German public faced a flood of disjointed information from news articles, government briefings, and social media. We saw a clear UX gap: while the data was technically open, it was not legible to most people. The challenge was to make complex, temporal datasets interpretable—and to allow for exploration without overwhelming users with noise.

Research & Design Process

- We began with a survey and interviews, learning that users wanted to track regional trends, compare time periods, and explore possible connections between case counts, deaths, and economic impact.

- Inspired by scrollytelling and news graphics, we prioritized clarity over density—designing for focused, purposeful interaction rather than dashboard complexity.

Visualization & Interaction Design

- We used D3.js to build interactive charts with smooth transitions, filtering, and hover-driven details. Charts were rendered as SVGs to ensure crisp resolution across devices.

- CSS Grid and Flexbox enabled a responsive layout that adapted to larger screens (desktop, iPad), which our research showed were the preferred devices for analytical browsing.

Challenges & Iteration

One of the biggest design challenges was helping users explore without becoming disoriented. We solved this by implementing fixed headers, subtle animations to signal change, and embedded tooltips that revealed context on demand. Performance optimization was also key: datasets were sliced by region and time so the system only rendered visible data, ensuring speed and responsiveness.
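Slicing by region and time, as described above, might look something like the following. The record layout, the function name `visible_slice`, and the case numbers are placeholders made up for this sketch; they are not the dashboard's data or code.

```python
from datetime import date

def visible_slice(records, region, start, end):
    """Return only the records for one region inside [start, end]."""
    return [
        r for r in records
        if r["region"] == region and start <= r["date"] <= end
    ]

# Placeholder records, not real COVID-19 figures.
records = [
    {"region": "Bavaria", "date": date(2021, 1, 1), "cases": 120},
    {"region": "Bavaria", "date": date(2021, 1, 2), "cases": 135},
    {"region": "Berlin", "date": date(2021, 1, 1), "cases": 80},
    {"region": "Bavaria", "date": date(2021, 2, 1), "cases": 90},
]

window = visible_slice(records, "Bavaria", date(2021, 1, 1), date(2021, 1, 31))
print([r["cases"] for r in window])  # [120, 135]
```

Only the filtered slice would then be handed to the chart, so rendering cost scales with the visible window rather than the full dataset.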

Key Takeaways

- Effective data storytelling isn’t about showing everything—it’s about designing paths for exploration that balance detail and clarity.

- Even technical tools carry emotional weight. Many users described feeling 'informed but not overwhelmed'—a core UX goal in crisis contexts.

- Interactivity can create a sense of agency. By allowing users to adjust views and timeframes, we gave them the power to ask their own questions of the data.

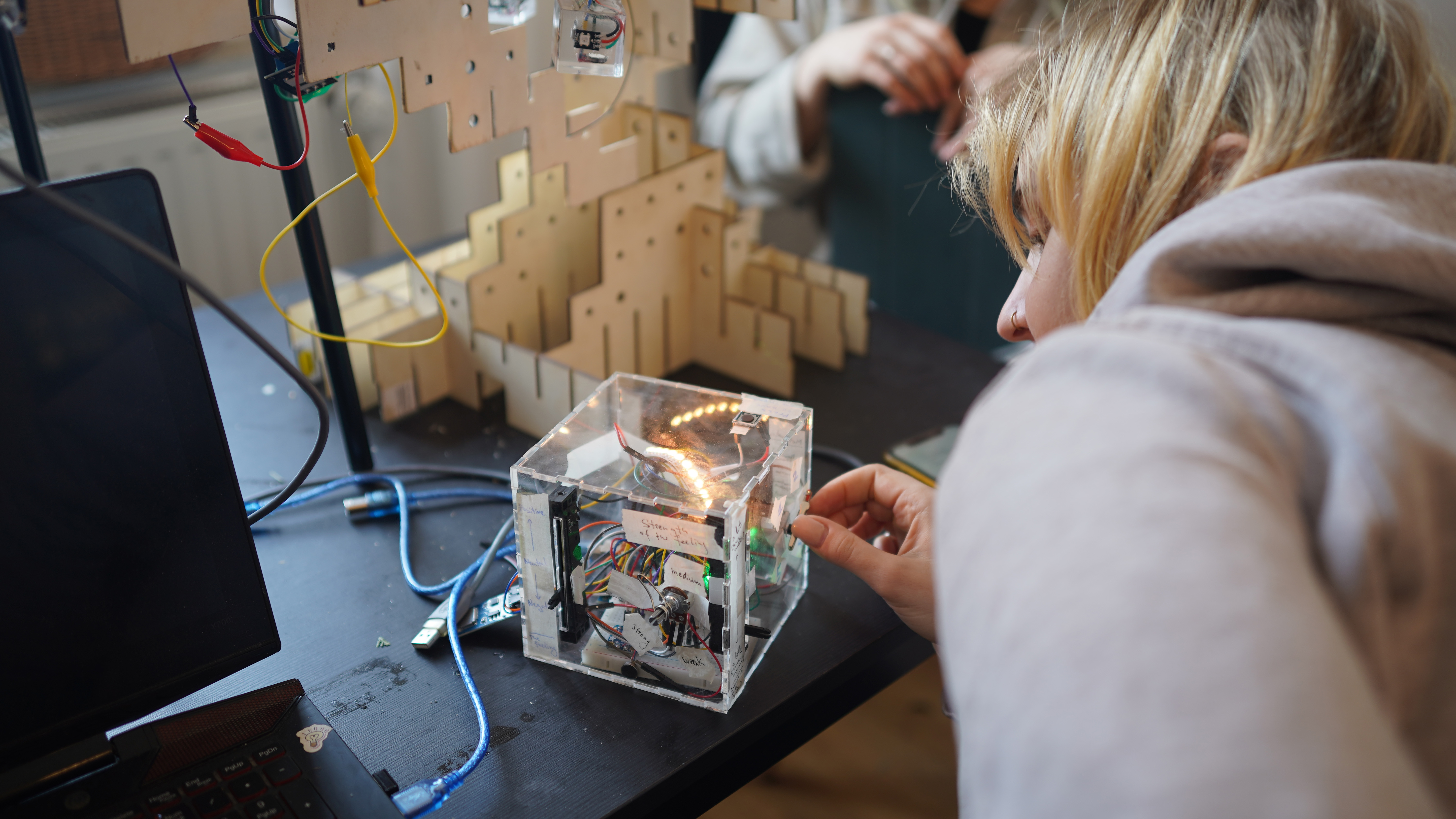

2021

Touchy Bonsai

Revealing the Hidden Data of Human Touch

Tangible User Interface

Research through Design

| Role | Tech Stack |
|---|---|
| Designer / Hardware Prototyper / Researcher | Arduino / Epoxy Casting / Touch Sensors / Stepper Motor / LEDs / Vibration Motor |

Touchy Bonsai is a data sculpture that materializes the emotional traces of interpersonal touch. It invites users to reflect on past interactions through an open-ended, haptic dialogue. Built as part of a research-through-design process, it challenges the idea that data must always be encoded, categorized, or interpreted via language.

Context & Challenge

During a period marked by physical distance and increased digital communication, we asked: what does it mean to 'store' a moment of touch? And how might such moments be revisited—not through screens, but through tangible, sensory interaction? We aimed to design a system that preserves and reflects emotional residue, without assigning it predefined meaning.

Approach

- We conducted self-ethnographic tracking of our own social touch interactions, documenting emotional shifts and contextual nuances. From these diaries, we extracted what we called 'hidden data'—affective, embodied qualities we couldn’t easily put into words.

- Rather than visualizing these data points, we chose to explore how they could be experienced physically. We rejected the model of encoding/decoding in favor of direct manipulation and projection, allowing users to author their own interpretations through touch.

Design Process

- The system consists of an input cube and the bonsai sculpture. Users adjust sliders and knobs on the cube to engage in a ritual-like interaction. Each movement triggers subtle light and haptic feedback on the tree, such as a glowing resin fruit or a gentle vibration—no two experiences are alike.

- Resin-cast 'fruits' hang from the tree’s branches and shift their position over time through a stepper motor. These fruits act as open, memory-like nodes—each suspended between form, light, and haptic motion.

User Testing

We conducted a pilot study with international students who shared the feeling of social distance and relational disconnection. After journaling five meaningful interactions, participants used the system to express and revisit those experiences. Rather than interpreting the responses as feedback, they treated the tree as a companion—one that responds, breathes, and remembers without judgment.

Key Insights

- Participants preferred the ambiguity of the experience, noting that it freed them from overthinking or categorizing emotion.

- The nonverbal interaction made the system feel meditative, grounding, and strangely personal—despite being shared across users.

- Users described the tree as 'alive' and 'feelingful'—not because it simulated human traits, but because it gave space for human reflection.

Reflection

- Touchy Bonsai taught us that not all systems need to explain. By inviting projection instead of imposing feedback, we created a medium for emotional authorship.

- This project demonstrated how haptics can move beyond realism and into resonance—crafting interaction spaces for memory, ambiguity, and care.

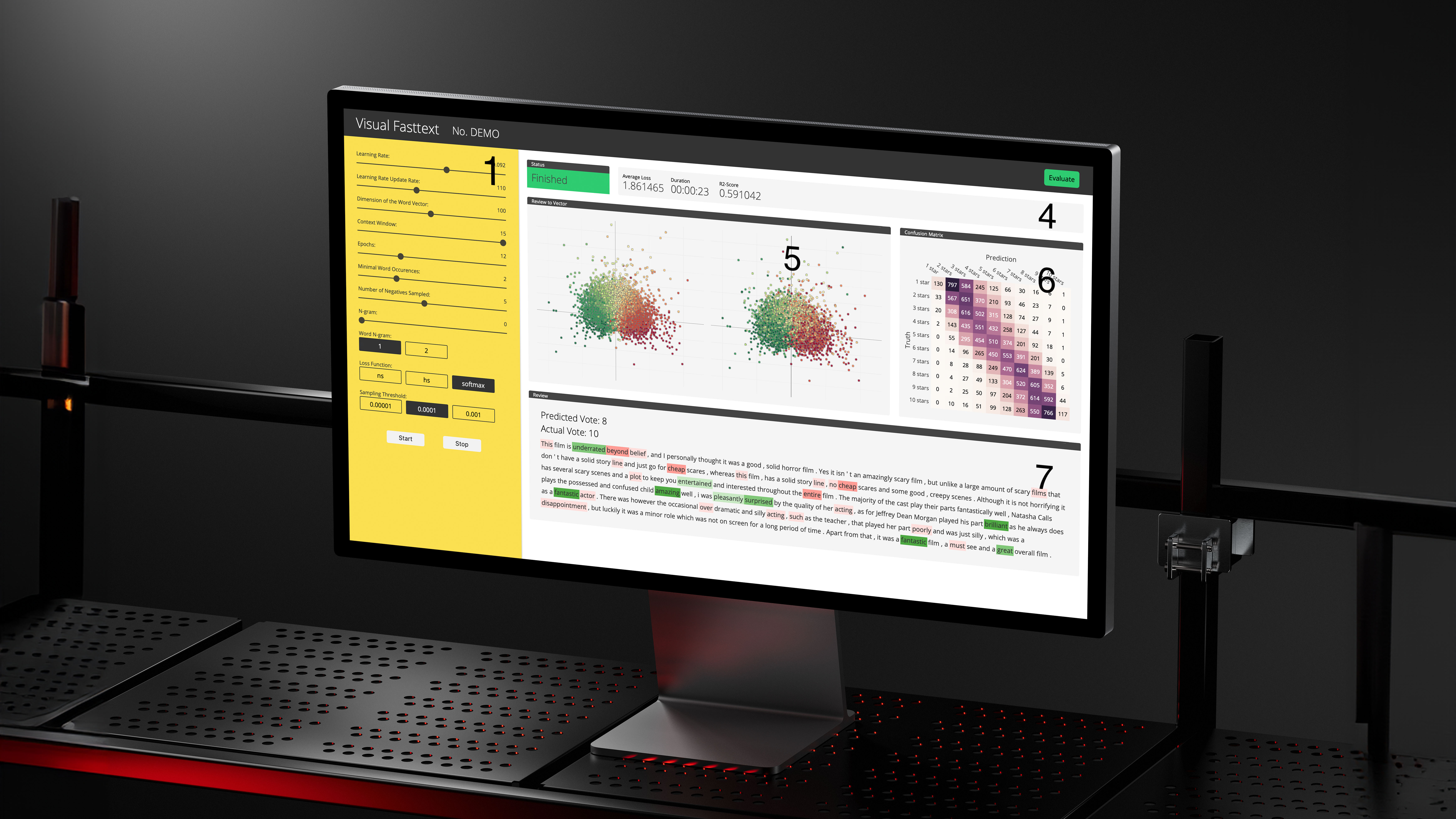

2019

VisTuning

Making Machine Learning Trustworthy Through Visualization

UX Research

Human-in-the-Loop ML

| Role | Tech Stack |
|---|---|
| Researcher / Full Stack Engineer | Python / NLP / HTML / CSS / Django |

Overview

This project tackled the gap between powerful machine learning systems and user understanding. I explored how interactive visualization could support non-expert users in understanding and trusting model outputs—specifically during hyperparameter tuning for text classification tasks.

Problem Framing

In real-world ML workflows, domain experts often have limited control or insight into the systems they rely on. This opacity undermines trust—especially when users can’t see how model configurations affect outcomes. The core question: how can we design visualization tools that help users reason about and feel confident in machine learning behavior?

Research & Design Process

- I built three interface variants: a baseline terminal setup, a mid-level visual interface, and a full-featured version with document embedding views, emotional word indicators, and training history.

- Participants used the system to train models on movie review sentiment data. Afterward, they played a prediction game that measured how much they relied on model decisions.

- To measure cognitive load, I administered the NASA-TLX questionnaire in all conditions, capturing perceived mental demand, frustration, and temporal demand.
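For reference, NASA-TLX has six subscales, and a common scoring shortcut is the unweighted "Raw TLX", the plain mean of the six ratings. The source does not say whether the raw or the weighted variant was used, so the sketch below is only one possibility, and the ratings are invented for the example.

```python
SUBSCALES = (
    "mental_demand", "physical_demand", "temporal_demand",
    "performance", "effort", "frustration",
)

def raw_tlx(ratings):
    """Unweighted (Raw TLX) score: the mean of the six subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

# Invented ratings on a 0-100 scale, for illustration only.
example = {
    "mental_demand": 60, "physical_demand": 20, "temporal_demand": 40,
    "performance": 30, "effort": 50, "frustration": 10,
}
score = raw_tlx(example)
print(score)  # 35.0
```

Keeping the subscale ratings alongside the overall score makes it possible to compare individual dimensions, such as mental demand and frustration, across conditions.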

Key Interface Features

- Embedding Visualization – Sentences and reviews were plotted in 2D (via SVD), enabling participants to explore the semantic landscape shaped by different hyperparameter choices.

- Emotion Word Highlights – The model’s most influential terms were color-coded by sentiment weight, giving users a peek into how predictions were formed.

- Recovery Timeline – A scrollable record of past training configurations allowed participants to revisit previous models, compare performance, and iterate.
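The 2D projection via SVD mentioned above can be written compactly. The notation is an assumption made for this sketch: $X$ stands for a hypothetical $n \times d$ feature matrix with one row per sentence or review, since the source does not specify the features.

```latex
% Assumed setup: X is an n-by-d document feature matrix (hypothetical).
X = U \Sigma V^{\top}
\qquad\Longrightarrow\qquad
Z = U_{:,1:2}\,\Sigma_{1:2,\,1:2} = X\,V_{:,1:2},
\qquad Z \in \mathbb{R}^{n \times 2}
```

Each row of $Z$ gives the two plotted coordinates of one document, using the two directions with the largest singular values.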

Evaluation & Findings

- A between-subjects study with 29 participants showed that the full-visual interface significantly increased model trust scores. Users in this group revised their decisions to align with the system more often than others.

- NASA-TLX scores revealed an interesting tradeoff: while the full-featured interface demanded more mental effort than the terminal baseline, users reported lower frustration and greater satisfaction—indicating that **meaningful cognitive load** can support trust.

- The mid-level visualization group showed more mixed results—demonstrating that partial transparency without clarity may overwhelm rather than support decision-making.

Key Learnings

- Cognitive effort isn’t inherently negative—users can accept higher effort if they perceive agency, clarity, and control in return.

- This project helped me see UX research as a bridge between system complexity and human comprehension. The goal isn’t just to simplify—but to empower.

- It laid the foundation for my continued interest in human-centered AI design—where interfaces help people not just interact with, but truly collaborate with intelligent systems.

On mighty wings the spirit of history floats through the ages, and shows—giving courage and comfort, and awakening gentle thoughts—on the dark nightly background, but in gleaming pictures, the thorny path of honor, which does not, like a fairy tale, end in brilliancy and joy here on earth, but stretches out beyond all time, even into eternity!